NLP Learning Series: Part 3 - Attention, CNN and what not for Text Classification

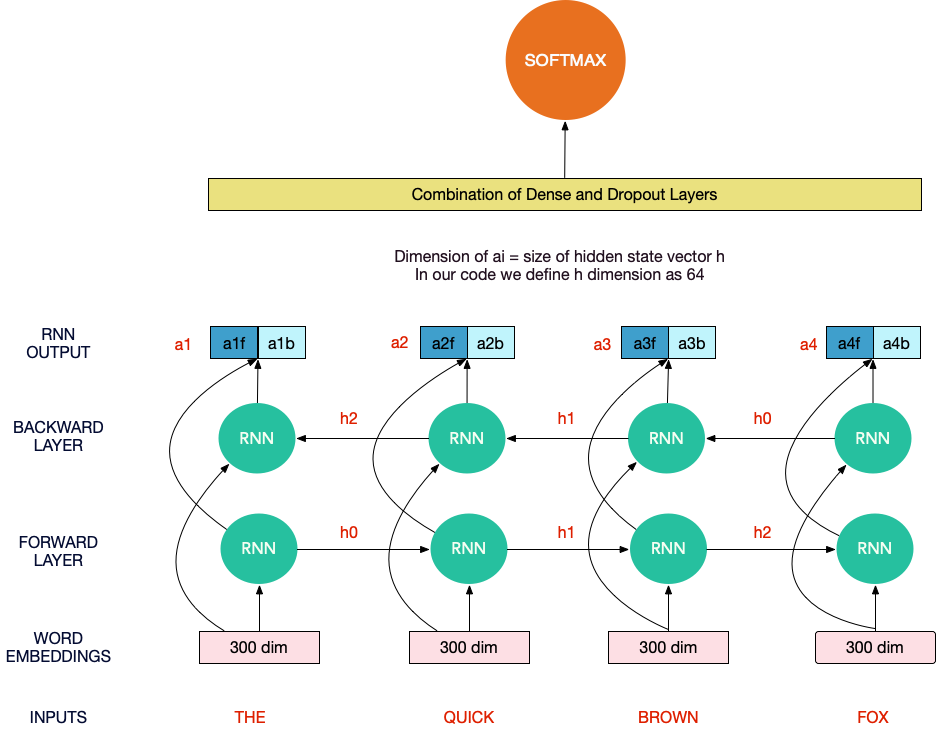

This is the third post in the NLP text classification series. As a recap, I started with an NLP text classification competition on Kaggle called the Quora Question Insincerity Challenge, and decided to share what I learned through a series of blog posts on text classification. The first post covered the preprocessing techniques that work with deep learning models and ways to increase embedding coverage. In the second post, I walked through some basic conventional models like TFIDF, Count Vectorizer, Hashing, etc. that have been used in text classification and tried to assess their performance to create a baseline. In this post, I delve deeper into deep learning models and the various architectures we could use to solve the text classification problem. To make this post platform generic, I am going to code in both Keras and PyTorch. I will use various other models which we were not able to use in this competition like ULMFit transfer learning approaches in the fourt…
