Using XGBoost for time series prediction tasks

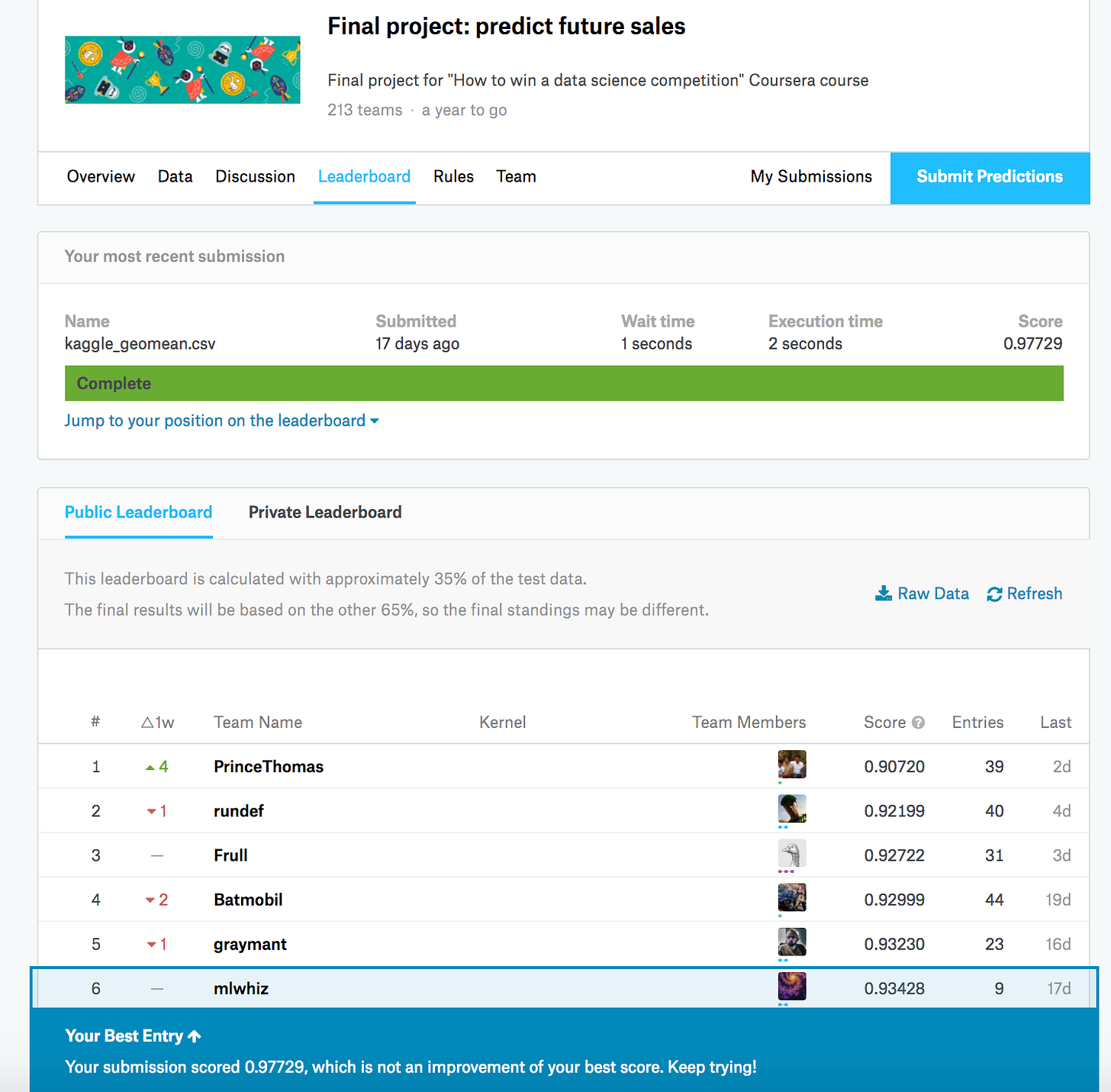

Recently, Kaggle master Kazanova and some of his friends released a Coursera course called “How to win a data science competition”. You can start for free with the 7-day free trial. The course includes a final project, which is itself a time series prediction problem. Here I will describe how I reached a top 10 position, as of writing this article.